What is this fuss around OpenAI? Here is a takeaway.

My take on the fuss around OpenAI is that what is happening is, at most, highly symbolic. I will give you one analysis, one question and one takeaway.

One Analysis

Here is what I heard in Satya Nadella’s latest post on LinkedIn:

📢 « We remain committed to our partnership with OpenAI »

🙊 OpenAI won't see most of the billions of dollars that were planned.

📢 « and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners. »

🙊 Markets! We will still deliver GPT products that have nothing to do with AGI research and will bring in massive $ as soon as next quarter.

📢 « We look forward to getting to know Emmett Shear and OAI's new leadership team and working with them. »

🙊 We couldn't take over OpenAI's structure so this partnership will slowly die while...

📢 « And we’re extremely excited to share the news that Sam Altman and Greg Brockman, together with colleagues, will be joining Microsoft to lead a new advanced AI research team. We look forward to moving quickly to provide them with the resources needed for their success. »

🙊 ... we are building our own solution to remove our dependency on OpenAI and will invest twice as many billions in it.

One Question

If humanity needs a great deal of long-term investment, in any form, to achieve progress, can it get there without getting lost in short-term interests along the way?

(As you can guess, the topic of this question is much larger than LLMs!)

One Takeaway

There is panic around existing investments in OpenAI’s technology. The takeaway is simple: do not rely on an external vendor for what you should own.

You should have people and processes in place to document your ways of working, along with transparent, structured knowledge-sharing practices.

If GenAI or any other technology clearly adds value, you should be able to plug in or unplug any external vendor as a commodity provider, meaning that:

You should own every piece of intelligence, its augmentation and its structure, and not depend on any third party. For example, there are market data providers, but you should build your own market knowledge; there are domain-specific analytics and marketing companies, but you should own the way you define your customer segments, how you evaluate marketing campaigns, and what churn means for your specific business offer.

CEOs and business teams should own (or have their tech teams explain to them until they own) a systemic understanding of their existing and envisioned tech choices: inputs, outputs, maintenance skills, legal issues.

Yes, that means owning strategic, sovereign knowledge, but building a significant part of your organization on someone else’s business model is not risk-free.

What is your take? Feel free to start the discussion in the comments.
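For the more technical readers, here is one way the "plug/unplug" idea could look in code. This is only a minimal sketch under my own assumptions: `TextProvider`, `VendorA`, `VendorB` and `Assistant` are hypothetical names made up for illustration, and no real vendor API is called. The point is that the segment definitions and the prompt history live on your side, while the vendor sits behind a small interface.

```python
from dataclasses import dataclass, field
from typing import Protocol


class TextProvider(Protocol):
    """Hypothetical contract that any external GenAI vendor must satisfy."""

    def complete(self, prompt: str) -> str: ...


class VendorA:
    """Stand-in for one external vendor (illustrative, no real API call)."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorB:
    """Stand-in for a competing vendor with the same contract."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


@dataclass
class Assistant:
    """Owned by the organization: domain definitions, prompts, history."""

    provider: TextProvider
    segment_definitions: dict[str, str]
    history: list[str] = field(default_factory=list)

    def describe_segment(self, name: str) -> str:
        # The definition comes from in-house knowledge, not from the vendor.
        prompt = f"Summarize segment '{name}': {self.segment_definitions[name]}"
        self.history.append(prompt)
        return self.provider.complete(prompt)


assistant = Assistant(VendorA(), {"loyal": "3+ purchases in 12 months"})
first = assistant.describe_segment("loyal")

# Unplug one vendor and plug in another; the owned knowledge is untouched.
assistant.provider = VendorB()
second = assistant.describe_segment("loyal")
```

Swapping the provider is a single assignment because everything the business cares about (what a "loyal" customer is, which questions were asked) never left the organization's own code.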

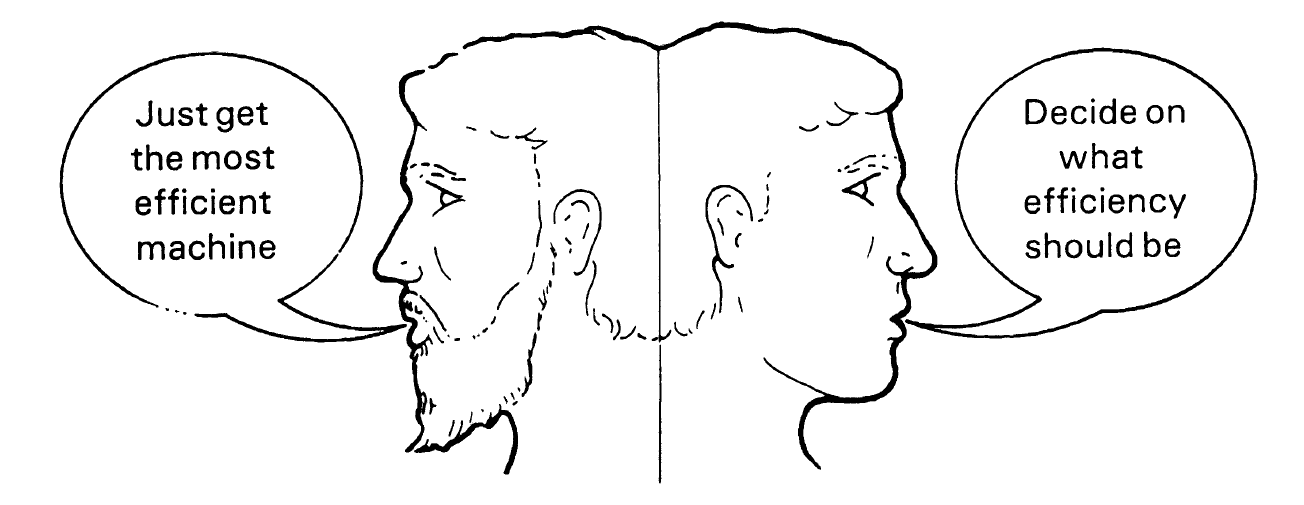

The illustration for this article shows one of several opposing ways of looking at science or technology. It is taken from Bruno Latour’s « Science in Action: How to Follow Scientists and Engineers Through Society », a major reference for studying the gap between science and technology and all the other disciplines that think it has nothing to do with them.